Scaling the Livepeer Network

The Livepeer.com streaming API is powered by Livepeer, a network with a uniquely distributed architecture. At the core of this network is a live ingest and transcoding engine designed to keep the live streaming workflow robust in the face of networking issues or hardware failures. This design is what allows the Livepeer.com infrastructure to scale.

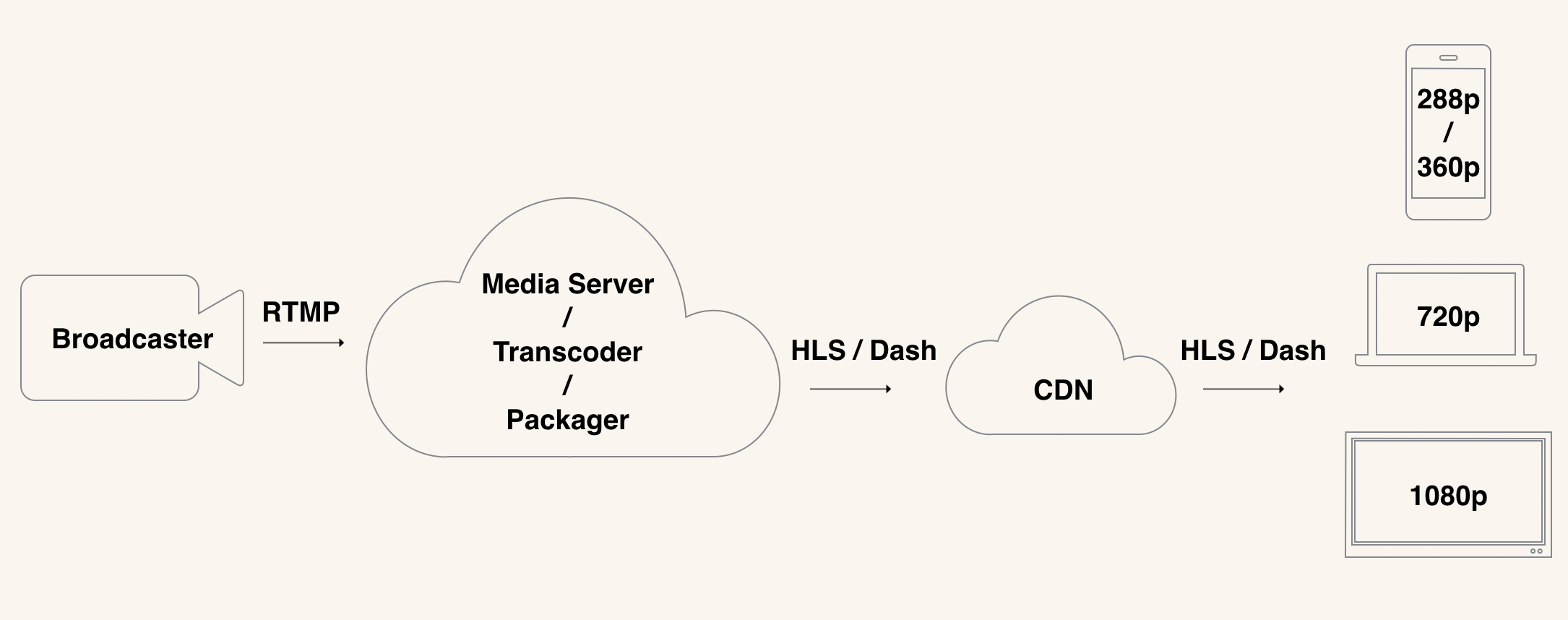

The Simple Live Streaming Setup

In a simple live streaming setup, a broadcaster sends a video stream into a media server. The media server receives the stream, transcodes it into different renditions, packages it up into the delivery format, and sends it to the CDN edge. The video player loads from the edge and plays the video on the user’s device.

This system works well for a small number of concurrent streams. But as we start to scale it, we quickly run into performance bottlenecks in the media server and transcoding system, a crucial piece for a live stream to reach a wide audience. As an example, a medium VM on AWS can only transcode 2-3 concurrent streams in a standard bitrate ladder (1080p, 720p, 480p, 360p, 240p). A common solution to this problem is to operate many media server instances and load balance between them. However, this creates another problem: transcoding processes can fail in the middle of a stream if the resources are not carefully managed. Dynamically spinning servers up and down and load balancing long-running streams between them is difficult, and it makes the system brittle. A single small error can cause multiple streams to fail at the same time, and each failure can make the live streams buffer, drop frames, or cut out completely.

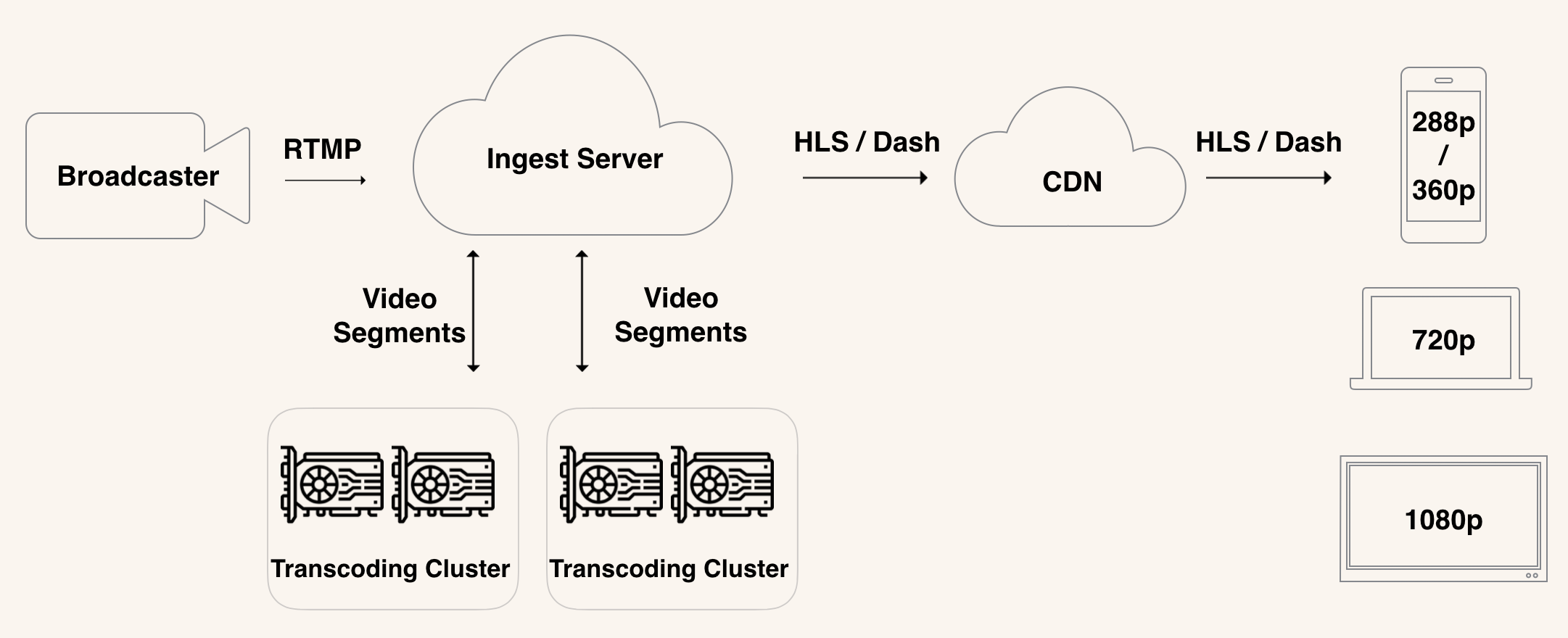

The Distributed Live Streaming Setup

Instead of having each stream handled by a single server, Livepeer takes a distributed approach for ingest and transcoding. Ingest servers create video segments based on incoming keyframe intervals, and each segment is sent to a network of distributed transcoding clusters.
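As a rough illustration of keyframe-based segmentation, the sketch below cuts a frame sequence so that every segment starts on a keyframe and can therefore be decoded on its own. The `Frame` type and `segment_on_keyframes` function are hypothetical names invented for this example, not part of the Livepeer codebase.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, List


@dataclass
class Frame:
    pts: float         # presentation timestamp in seconds (illustrative)
    is_keyframe: bool  # True if the frame can be decoded independently


def segment_on_keyframes(frames: Iterable[Frame]) -> Iterator[List[Frame]]:
    """Yield lists of frames, starting a new segment at each keyframe."""
    current: List[Frame] = []
    for frame in frames:
        # A keyframe closes the segment in progress and opens a new one.
        if frame.is_keyframe and current:
            yield current
            current = []
        current.append(frame)
    if current:
        yield current


# Assumed input: ten frames with a keyframe every fourth frame.
frames = [Frame(pts=i / 2, is_keyframe=(i % 4 == 0)) for i in range(10)]
segments = list(segment_on_keyframes(frames))
segment_lengths = [len(s) for s in segments]
```

Because segment boundaries follow the broadcaster's keyframe interval, segment duration is determined by the incoming stream rather than by the ingest server.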

The ingest server keeps track of the latency and performance of each transcoding cluster, so that it can switch to a different cluster in the middle of a stream when performance starts to degrade. Since the ingest servers only need to handle video ingestion and segmentation, they can be strategically placed in high-bandwidth environments with geographic diversity, which optimizes the network connection to broadcasters and CDNs. Each ingest server can handle many concurrent streams, because it no longer needs to take on the computational burden of transcoding.
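One simple way to picture this kind of per-cluster tracking is an exponentially weighted moving average (EWMA) of observed latency, with each segment routed to the cluster that currently scores best. This is a minimal sketch under that assumption; the `ClusterTracker` class, the cluster names, and the smoothing factor are all hypothetical, and the article does not say which metric Livepeer actually uses.

```python
class ClusterTracker:
    """Tracks a smoothed latency per cluster (hypothetical helper)."""

    def __init__(self, alpha: float = 0.3):
        self.alpha = alpha  # weight given to the newest observation
        self.latency = {}   # cluster name -> smoothed latency in seconds

    def record(self, cluster: str, seconds: float) -> None:
        prev = self.latency.get(cluster)
        if prev is None:
            self.latency[cluster] = seconds
        else:
            # EWMA: recent samples count more, old ones decay away.
            self.latency[cluster] = self.alpha * seconds + (1 - self.alpha) * prev

    def best(self) -> str:
        """Return the cluster with the lowest smoothed latency."""
        return min(self.latency, key=self.latency.get)


tracker = ClusterTracker()
tracker.record("cluster-a", 1.0)
tracker.record("cluster-b", 2.0)
first_choice = tracker.best()

# cluster-a degrades mid-stream, so the next segment goes elsewhere.
tracker.record("cluster-a", 10.0)
second_choice = tracker.best()
```

Since the decision is made per segment, a switch like the one above takes effect at the next segment boundary without interrupting the stream.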

The transcoding cluster has layers of redundancy and failover, so that failures can be quickly retried within the cluster. These transcoding clusters can operate in a variety of environments: professional data centers, cryptocurrency mining farms, and gaming computers, to name a few. Most of the current transcoders are GPU-based, which reduces transcoding latency by an order of magnitude. Because these environments cost less than public cloud providers, transcoding jobs can even be run multiple times in parallel to ensure a high success rate and low latency in the live stream. The Livepeer transcoding software is currently tuned to run in a multi-GPU environment, load balancing across 2-8 GPUs on the same machine. Depending on the type of GPU, some machines can transcode over 100 concurrent video streams. If internet congestion affects a certain region, a specific ISP, or a DNS provider, other transcoder clusters seamlessly pick up the work from the ones that are impacted. All of this can happen without central coordination, since the ingest servers and transcoders all make independent routing decisions.
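The idea of running the same transcoding job several times in parallel can be sketched as a "first successful result wins" race. The example below uses Python's standard `concurrent.futures` module; the transcoder functions are stand-ins invented for the illustration, and this is not a description of how Livepeer's own software dispatches work.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def transcode_redundantly(segment, transcoders):
    """Send one segment to every transcoder and return the first success."""
    pool = ThreadPoolExecutor(max_workers=len(transcoders))
    futures = [pool.submit(t, segment) for t in transcoders]
    errors = []
    try:
        # as_completed yields futures in the order they finish.
        for future in as_completed(futures):
            try:
                return future.result()
            except Exception as exc:  # this copy failed; wait for another
                errors.append(exc)
        raise RuntimeError(f"all {len(transcoders)} transcoders failed: {errors}")
    finally:
        # Do not block on the slower copies once a result is available.
        pool.shutdown(wait=False)


# Hypothetical transcoders with different behaviour.
def failing(segment):
    raise RuntimeError("GPU unavailable")


def slow(segment):
    time.sleep(0.2)
    return f"{segment}:slow"


def fast(segment):
    time.sleep(0.01)
    return f"{segment}:fast"


result = transcode_redundantly("seg-0", [failing, slow, fast])
```

The trade-off is that redundant copies consume extra compute, which is only practical when capacity is cheap, as the paragraph above describes.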

The distributed architecture also poses unique and interesting engineering challenges. For example, when each segment is processed independently, it is challenging for the system to maintain stream awareness and ensure consistent segment lengths, GOP sizes, and other format-specific video configurations. The communication between ingest servers and transcoders should have proper back-pressure built in, so that performance degrades gracefully instead of abruptly. There should also be a proper retry policy in place, so that a DDoS attacker cannot bring down the whole infrastructure through amplified retries caused by transcoding errors. Livepeer is working on solutions that address issues like these both in a controlled environment and in a decentralized environment where part of the infrastructure is run by third-party operators.
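One common way to keep retries from amplifying load is a retry budget, which caps retries at a fixed percentage of first attempts regardless of how many requests fail. The sketch below illustrates that general technique; the `RetryBudget` class and the 10% figure are assumptions made for the example, not Livepeer's actual policy.

```python
class RetryBudget:
    """Caps retries at a percentage of first attempts (hypothetical helper)."""

    def __init__(self, retry_percent: int = 10):
        self.retry_percent = retry_percent
        self.requests = 0  # first attempts seen so far
        self.retries = 0   # retries granted so far

    def on_request(self) -> None:
        self.requests += 1

    def try_retry(self) -> bool:
        """Grant a retry only while the budget has room for it."""
        allowed = self.requests * self.retry_percent // 100
        if self.retries < allowed:
            self.retries += 1
            return True
        return False


# Worst case: 50 segments arrive and every first attempt fails.
budget = RetryBudget(retry_percent=10)
granted = 0
for _ in range(50):
    budget.on_request()
    if budget.try_retry():
        granted += 1
```

With a budget like this, a burst of failing requests can add at most a bounded fraction of extra load, where a naive "retry every failure N times" policy would multiply it.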

Deployment Automation

Livepeer is a decentralized network, and the nodes that make up the network are run by independent node operators. To maintain baseline capacity in regions of the world with high demand, Livepeer operates a portion of the network itself. This deployment runs on a heterogeneous environment, ranging from bare metal servers to Kubernetes namespaces. We use tools like Kubernetes, Ansible, and Consul to manage it, which gives us more control over resource management and lets us recover automatically from hardware and software failures.

Conclusion

Live streaming on the internet is a difficult task because users demand 100% reliability, and the internet is not a very stable environment. Livepeer pairs robust software with "cheap and fast" hardware to achieve high reliability while keeping costs low. We are constantly improving our open source software, and we would love to hear your feedback. If you are interested in learning more about Livepeer, please check out the Livepeer primer. If you are interested in working on Livepeer, please take a look at the "senior video infrastructure engineer" position and the "senior software engineer - public network" position.